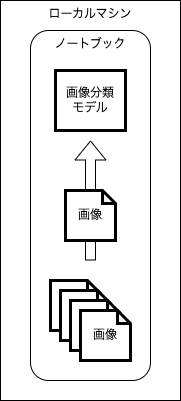

Image Classification Standalone

Mix.install(

[

{:nx, "~> 0.9", override: true},

{:exla, "~> 0.9"},

{:axon_onnx, "~> 0.4", git: "https://github.com/mortont/axon_onnx/"},

{:evision, "~> 0.2"},

{:flow, "~> 1.2"},

{:req, "~> 0.5"},

{:kino, "~> 0.15"}

],

config: [nx: [default_backend: EXLA.Backend]]

)

Standalone processing

Load models

Nx.Defn.default_options(compiler: EXLA)

Nx.global_default_backend(EXLA.Backend)

classes =

"/tmp/sorabatake/dataset/test"

|> File.ls!()

|> Enum.sort()

model_path = "/tmp/sorabatake/efficientnet_v2_m.onnx"

{model, params} = AxonOnnx.import(model_path)

Test data
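The cells in this section take the true label from the directory name of each test file and count how many predictions match it. As a minimal sketch of that bookkeeping, assuming the same `.../test/<class>/<file>.png` layout, the label can also be read with `Path.dirname/1` and `Path.basename/1` instead of a fixed split index, and the count can be turned into an accuracy ratio. The file names and predictions below are made-up stand-ins, not results from the model.

```elixir
# Hypothetical {file_path, predicted_class} pairs standing in for predicted_classes.
sample_predictions = [
  {"/tmp/sorabatake/dataset/test/clear/a.png", "clear"},
  {"/tmp/sorabatake/dataset/test/clear/b.png", "cloudy"},
  {"/tmp/sorabatake/dataset/test/cloudy/c.png", "cloudy"},
  {"/tmp/sorabatake/dataset/test/cloudy/d.png", "cloudy"}
]

# The true label is the name of the directory that contains the file.
true_class = fn file_path ->
  file_path |> Path.dirname() |> Path.basename()
end

# Count the pairs whose directory name equals the predicted class.
correct =
  Enum.count(sample_predictions, fn {file_path, predicted} ->
    true_class.(file_path) == predicted
  end)

accuracy = correct / length(sample_predictions)

IO.inspect({correct, accuracy})
```

Reading the label from the parent directory keeps working if the dataset is moved to a path with a different depth, which a fixed index into the split path does not.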

test_files =

classes

|> Enum.flat_map(fn class ->

"/tmp/sorabatake/dataset/test/#{class}"

|> File.ls!()

|> Enum.filter(&String.ends_with?(&1, ".png"))

|> Enum.map(fn filename -> "/tmp/sorabatake/dataset/test/#{class}/#{filename}" end)

end)

|> Enum.sort()

test_files

|> Enum.map(fn file_path ->

file_path

|> Evision.imread()

|> Evision.cvtColor(Evision.Constant.cv_COLOR_BGR2RGB())

|> Evision.resize({80, 80})

|> Evision.Mat.to_nx()

|> Kino.Image.new()

end)

|> Kino.Layout.grid(columns: 10)

test_files

|> Enum.at(0)

|> Evision.imread()

|> Evision.cvtColor(Evision.Constant.cv_COLOR_BGR2RGB())

|> Evision.resize({224, 224})

|> Evision.Mat.to_nx(EXLA.Backend)

|> Nx.divide(255)

|> Nx.subtract(Nx.tensor([0.485, 0.456, 0.406]))

|> Nx.divide(Nx.tensor([0.229, 0.224, 0.225]))

|> Nx.transpose(axes: [2, 0, 1])

|> then(fn tensor ->

model

|> Axon.predict(params, Nx.Batch.stack([tensor]))

|> Nx.argmax(axis: 1)

|> then(&Nx.to_number(&1[0]))

|> then(&Enum.at(classes, &1))

end)

predicted_classes =

test_files

|> Flow.from_enumerable(stages: 4, max_demand: 4)

|> Flow.map(fn file_path ->

predicted =

file_path

|> Evision.imread()

|> Evision.cvtColor(Evision.Constant.cv_COLOR_BGR2RGB())

|> Evision.resize({224, 224})

|> Evision.Mat.to_nx(EXLA.Backend)

|> Nx.divide(255)

|> Nx.subtract(Nx.tensor([0.485, 0.456, 0.406]))

|> Nx.divide(Nx.tensor([0.229, 0.224, 0.225]))

|> Nx.transpose(axes: [2, 0, 1])

|> then(fn tensor ->

model

|> Axon.predict(params, Nx.Batch.stack([tensor]))

|> Nx.argmax(axis: 1)

|> then(&Nx.to_number(&1[0]))

|> then(&Enum.at(classes, &1))

end)

{file_path, predicted}

end)

|> Enum.sort_by(&elem(&1, 0))

predicted_classes

|> Enum.map(fn {file_path, predicted_class} ->

if file_path |> String.split("/") |> Enum.at(5) == predicted_class, do: 1, else: 0

end)

|> Enum.sum()

predicted_classes

|> Enum.map(fn {file_path, predicted_class} ->

[

Kino.Markdown.new(file_path |> String.split("/") |> Enum.at(5)),

Kino.Markdown.new(predicted_class),

file_path

|> Evision.imread()

|> Evision.cvtColor(Evision.Constant.cv_COLOR_BGR2RGB())

|> Evision.resize({120, 120})

|> Evision.Mat.to_nx()

|> Kino.Image.new()

]

|> Kino.Layout.grid()

end)

|> Kino.Layout.grid(columns: 5)

Tellus data

This section uses the Tellus Satellite Data Traveler API.

API specification:

Data provided by: Daichi (ALOS) AVNIR-2 data (JAXA)

The specifications of Daichi (ALOS) AVNIR-2 are available here:

https://www.eorc.jaxa.jp/ALOS/jp/alos/sensor/avnir2_j.htm

defmodule TellusTraveler do

@base_path "https://www.tellusxdp.com/api/traveler/v1"

@data_path "#{@base_path}/datasets"

defp get_headers(token) do

%{

"Authorization" => "Bearer #{token}",

"Content-Type" => "application/json"

}

end

def get_data_files(token, dataset_id, data_id) do

url = "#{@data_path}/#{dataset_id}/data/#{data_id}/files/"

headers = get_headers(token)

url

|> Req.get!(headers: headers)

|> then(& &1.body["results"])

end

defp get_data_file_download_url(token, dataset_id, data_id, file_id) do

url = "#{@data_path}/#{dataset_id}/data/#{data_id}/files/#{file_id}/download-url/"

headers = get_headers(token)

url

|> Req.post!(headers: headers)

|> then(& &1.body["download_url"])

end

def download(token, dataset_id, scene_id, dist \\ "/tmp/") do

[dist, scene_id]

|> Path.join()

|> File.mkdir_p!()

token

|> get_data_files(dataset_id, scene_id)

|> Enum.map(fn file ->

file_path = Path.join([dist, scene_id, file["name"]])

unless File.exists?(file_path) do

token

|> get_data_file_download_url(dataset_id, scene_id, file["id"])

|> Req.get!(into: File.stream!(file_path))

end

file_path

end)

end

end

# [Tellus official] AVNIR-2_1B1

dataset_id = "ea71ef6e-9569-49fc-be16-ba98d876fb73"

# July 25, 2007, Seto Inland Sea

scene_id = "bbf4a49f-2440-4b0f-a964-b64b7c7deacc"

# Enter your Tellus token

token_input = Kino.Input.password("Token")

file_path_list =

token_input

|> Kino.Input.read()

|> TellusTraveler.download(dataset_id, scene_id)

get_band_tensor = fn file_path_list, prefix ->

file_path_list

|> Enum.find(fn file ->

file

|> Path.basename()

|> String.starts_with?(prefix)

end)

|> Evision.imread(flags: Evision.Constant.cv_IMREAD_GRAYSCALE())

|> Evision.Mat.to_nx(EXLA.Backend)

end

blue_tensor = get_band_tensor.(file_path_list, "IMG-01")

green_tensor = get_band_tensor.(file_path_list, "IMG-02")

red_tensor = get_band_tensor.(file_path_list, "IMG-03")

rgb_tensor = Nx.stack([red_tensor, green_tensor, blue_tensor], axis: 2)

rgb_tensor

|> Evision.Mat.from_nx_2d()

|> Evision.resize({640, 640})

|> Evision.Mat.to_nx()

|> Kino.Image.new()

tiled_tensors =

rgb_tensor[[0..-1//1, 0..7199//1, 0..-1//1]]

# Split horizontally

|> Nx.to_batched(500)

# Split vertically

|> Enum.map(&Nx.transpose(&1, axes: [1, 0, 2]))

|> Enum.flat_map(&Nx.to_batched(&1, 450))

|> Enum.map(&Nx.transpose(&1, axes: [1, 0, 2]))

|> Enum.map(fn tensor ->

tensor

|> Evision.Mat.from_nx_2d()

|> Evision.resize({224, 224})

|> Evision.Mat.to_nx(EXLA.Backend)

end)

tiled_tensors

|> Enum.map(fn tensor ->

tensor

|> Evision.Mat.from_nx_2d()

|> Evision.resize({40, 40})

|> Evision.Mat.to_nx()

|> Kino.Image.new()

end)

|> Kino.Layout.grid(columns: 16)

Batch Prediction with module
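The prediction pipeline in this section chunks the tiles into groups of `batch_size`, pads the final batch up to full size, and then zips the outputs with the original indexes. Because `Enum.zip/2` stops at the shorter list, outputs that belong to padded rows are dropped. The following is a minimal pure-Elixir sketch of that flow, with integers as stand-ins for tiles, `List.duplicate/2` as a stand-in for `Nx.Batch.pad/2`, and a multiplication as a stand-in for the model; none of these stand-ins come from the notebook itself.

```elixir
batch_size = 4

# Ten integers stand in for ten image tiles.
items = Enum.to_list(0..9)

results =
  items
  |> Enum.with_index()
  |> Enum.chunk_every(batch_size)
  |> Enum.flat_map(fn batch ->
    values = Enum.map(batch, &elem(&1, 0))
    indexes = Enum.map(batch, &elem(&1, 1))

    # Pad the batch to a fixed size (stand-in for Nx.Batch.pad/2).
    padded = values ++ List.duplicate(0, batch_size - length(values))

    # Stand-in for the model: one output per row, padded rows included.
    outputs = Enum.map(padded, &(&1 * 10))

    # Enum.zip/2 stops at the shorter list, so padded outputs are discarded.
    outputs
    |> Enum.zip(indexes)
    |> Enum.map(fn {output, index} -> %{index: index, output: output} end)
  end)

IO.inspect(length(results))
IO.inspect(List.last(results))
```

Keeping every batch the same size means a compiled model sees one input shape throughout, while the index carried alongside each tile lets the results be put back in order after parallel processing.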

batch_size = 4

defmodule EfficientNetV2 do

import Nx.Defn

defn normalize(tensor) do

(tensor - Nx.tensor([0.485, 0.456, 0.406])) / Nx.tensor([0.229, 0.224, 0.225])

end

defn transform_for_input(img_tensor) do

(img_tensor / 255)

|> normalize()

|> Nx.transpose(axes: [2, 0, 1])

end

defn get_top_class_index(outputs) do

Nx.argmax(outputs, axis: 1)

end

defn softmax(tensor) do

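# Subtract the row maximum before exponentiating to avoid overflow on large logits

shifted = tensor - Nx.reduce_max(tensor, axes: [-1], keep_axes: true)

Nx.exp(shifted) / Nx.sum(Nx.exp(shifted), axes: [-1], keep_axes: true)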

end

defn get_top_class_score(outputs) do

outputs

|> softmax()

|> Nx.reduce_max(axes: [-1])

end

def preprocess(tensor_list, batch_size) do

tensor_list

|> Enum.map(fn tensor ->

transform_for_input(tensor)

end)

|> Nx.Batch.stack()

|> Nx.Batch.pad(batch_size - Enum.count(tensor_list))

end

def postprocess(outputs, classes) do

predicted_classes = get_top_class_index(outputs)

predicted_scores = get_top_class_score(outputs)

output_size =

outputs

|> Nx.shape()

|> elem(0)

0..(output_size - 1)

|> Enum.to_list()

|> Enum.map(fn index ->

predicted_class =

predicted_classes[index]

|> Nx.to_number()

|> then(&Enum.at(classes, &1))

predicted_score = Nx.to_number(predicted_scores[index])

%{

predicted_class: predicted_class,

predicted_score: predicted_score

}

end)

end

def predict(input_batch, model, params) do

Axon.predict(model, params, input_batch)

end

end

predictions =

tiled_tensors

|> Enum.with_index()

|> Enum.chunk_every(batch_size)

|> Flow.from_enumerable(stages: 4, max_demand: 4)

|> Flow.map(fn batch ->

tensor_list = Enum.map(batch, &elem(&1, 0))

index_list = Enum.map(batch, &elem(&1, 1))

index_list

|> Enum.at(0)

|> IO.inspect()

tensor_list

|> EfficientNetV2.preprocess(batch_size)

|> EfficientNetV2.predict(model, params)

|> EfficientNetV2.postprocess(classes)

|> Enum.zip(index_list)

|> Enum.map(fn {prediction, index} ->

Map.put_new(prediction, :index, index)

end)

end)

|> Enum.to_list()

|> List.flatten()

|> Enum.sort(&(&1.index < &2.index))

color_map = %{

"clear" => [0, 0, 255],

"cloudy" => [255, 255, 255]

}

color_map

|> Map.get("clear")

|> Nx.tensor(type: :u8)

|> Nx.broadcast({40, 40, 3})

|> Kino.Image.new()

[

rgb_tensor

|> Evision.Mat.from_nx_2d()

|> Evision.resize({640, 640})

|> Evision.Mat.to_nx()

|> Kino.Image.new(),

predictions

|> Enum.map(fn prediction ->

color_map

|> Map.get(prediction.predicted_class)

|> Nx.tensor(type: :u8)

|> Nx.broadcast({40, 40, 3})

|> Kino.Image.new()

end)

|> Kino.Layout.grid(columns: 16)

]

|> Kino.Layout.grid(columns: 2)
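The grids above use 16 columns because the 7,200-pixel width is cut into 450-pixel tiles, and the tiles are produced band by band, each band being 500 rows tall. The following small sketch shows how a flat tile index maps back to a position in the cropped image, assuming that ordering. The helper name `tile_origin` is hypothetical and not part of the notebook.

```elixir
tile_height = 500
tile_width = 450
image_width = 7200

# Number of tiles in one horizontal band of the image.
tiles_per_row = div(image_width, tile_width)

# Hypothetical helper: flat tile index -> {row, column, top, left} in pixels.
tile_origin = fn index ->
  row = div(index, tiles_per_row)
  col = rem(index, tiles_per_row)
  {row, col, row * tile_height, col * tile_width}
end

IO.inspect(tiles_per_row)
IO.inspect(tile_origin.(0))
IO.inspect(tile_origin.(17))
```

With this mapping, a prediction's `:index` can be turned back into pixel coordinates, for example to draw the predicted class over the original scene rather than in a separate grid.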